Attrove

The Leverage Trap

When your AI is idle, are you failing?

The New Guilt

Here's something I posted recently that's been rattling around in my head:

Read that again. I'm feeling guilty because my software is resting.

There's an irony here that I can't shake: these tools have given us unprecedented leverage, yet somehow I feel like I'm not working hard enough. As I wrote back in December, there's a constant sense of being behind. But now the goalposts have moved. It's no longer about keeping up with the industry. It's about keeping up with the machine sitting in my terminal.

The Time Horizon Explosion

This feeling isn't irrational. It's a response to something real.

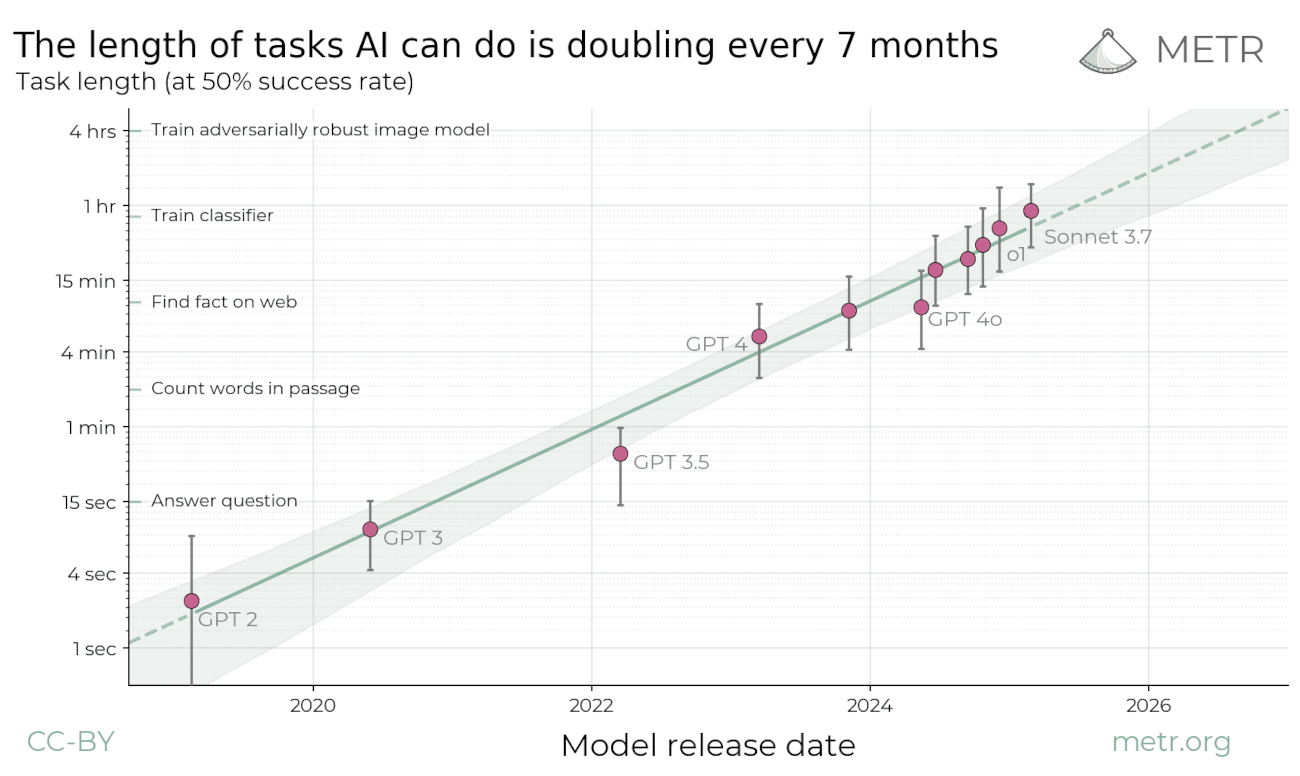

Researchers at METR recently published a striking finding: the length of tasks AI agents can complete autonomously (measured by how long the same tasks take humans) has been doubling every 7 months since 2019. And it's accelerating, with the doubling time down to 4 months as of late 2024.

Think about what this means. In 2019, frontier AI could handle tasks that took humans a few seconds, and even then it succeeded only about half the time. By early 2025, that 50% horizon was roughly 50 minutes. By the time you're reading this, we're pushing toward an hour of uninterrupted autonomous work.
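A constant doubling time means the horizon follows h(t) = h0 · 2^(t / T), where h0 is the starting horizon, t is months elapsed, and T is the doubling time. Here is a minimal sketch of that arithmetic, assuming the trend simply holds and starting from the roughly 50-minute figure for early 2025. The function name is hypothetical, and the projections are illustrative extrapolations, not forecasts.

```python
def time_horizon(start_minutes: float, months_elapsed: float, doubling_months: float) -> float:
    """Project a task-length horizon forward, assuming a constant doubling time."""
    return start_minutes * 2 ** (months_elapsed / doubling_months)


# Illustrative only: start from ~50 minutes and look 12 months ahead
for doubling in (7, 4):
    projected = time_horizon(50, 12, doubling)
    print(f"doubling every {doubling} months -> {projected:.0f} minutes after 12 months")
# doubling every 7 months -> 164 minutes after 12 months
# doubling every 4 months -> 400 minutes after 12 months
```

The gap between those two lines is why the shift from 7-month to 4-month doubling matters: over a single year, the faster rate yields well over twice the horizon.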

This isn't Moore's Law for transistors. This is Moore's Law for how long AI can work without you.

And unlike chip density, this directly translates to output. The force multiplier available to you, right now, is compounding exponentially. Every few months, the multiplier on your effort increases. No wonder we feel behind. We're trying to keep pace with an exponential curve using linear intuition.
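The "linear intuition" problem can be made concrete with a toy comparison. This is a minimal sketch, assuming the multiplier doubles once per period (the period length is left abstract). `exponential` and `linear_guess` are hypothetical names for illustration, not a model of any real measurement.

```python
def exponential(start: float, periods: int, factor: float = 2.0) -> float:
    """Value after `periods` steps if each step multiplies by `factor`."""
    return start * factor ** periods


def linear_guess(start: float, periods: int, factor: float = 2.0) -> float:
    """'Linear intuition': assume every future step adds the same amount as the first."""
    first_step_gain = start * factor - start
    return start + first_step_gain * periods


for periods in (1, 3, 6):
    print(periods, exponential(1, periods), linear_guess(1, periods))
# 1 2.0 2.0
# 3 8.0 4.0
# 6 64.0 7.0
```

The two agree after one period, which is what makes the linear guess feel reasonable. After six doublings, it is off by roughly 9x.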

Claude Code as the Perfect Cover Story

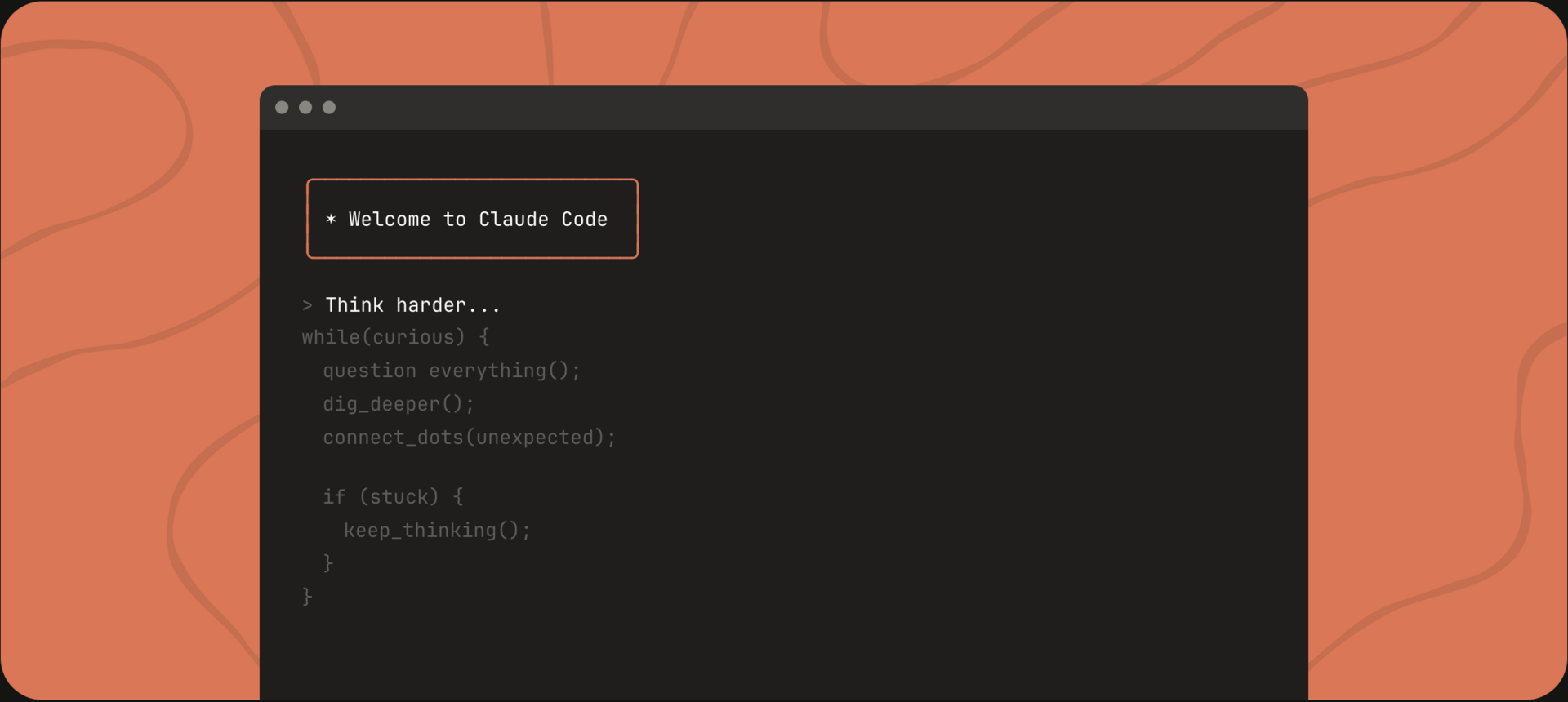

Claude Code brings AI to the terminal

Recent developments with Claude Code make this visceral in a way that ChatGPT never did.

With ChatGPT, you go to it. You open a tab, you ask a question, you leave. It's transactional. But Claude Code? It lives with you. It sits there in your terminal, cursor blinking, waiting. Its idle state is visible. Constant. Almost judgmental.

Over the past several weeks, I've settled into a rhythm: kick off Claude Code on a task, let it run in the background while I do other work, check back in 15-20 minutes, and review what it's built. The results are often incredible. I handle the human stuff (like product strategy, customer conversations, design taste) while delegating the technical implementation.

It's genuinely powerful. But there's a dark side: I can see when nothing's queued. And when the agent is idle, some part of my brain whispers that I'm leaving potential on the table.

The Utilization Mindset (and Why It's Toxic)

This feeling has a name. During my years at Apple, every factory visit hammered home the same mantra: max utilization. Machines are expensive. Downtime is waste. Keep the lines running.

That logic makes perfect sense when resources are scarce and fixed. But what happens when the resource becomes effectively infinite?

You become the bottleneck.
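This is the core idea of the theory of constraints: a serial workflow can't move faster than its slowest stage. Here is a minimal sketch, using hypothetical stage names and made-up capacities in tasks per day (not measurements from any real workflow), showing that making the agent stage enormous leaves the bottleneck where it was.

```python
def pipeline_throughput(capacities: dict[str, float]) -> tuple[str, float]:
    """Return the slowest stage and its capacity.

    In a serial pipeline every task passes through every stage, so overall
    throughput can't exceed the capacity of the slowest one.
    """
    slowest = min(capacities, key=lambda stage: capacities[stage])
    return slowest, capacities[slowest]


# Hypothetical capacities, in tasks per day
workflow = {
    "decide what to build": 4,
    "agent implementation": 50,
    "human review": 6,
}
print(pipeline_throughput(workflow))  # ('decide what to build', 4)

# Make the agent "effectively infinite": the bottleneck doesn't move
workflow["agent implementation"] = 5000
print(pipeline_throughput(workflow))  # ('decide what to build', 4)
```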

The question stops being "Is the agent busy?" and becomes "Am I working on the right problems?" That's a much harder question to answer, so we default to the easier one: we keep the agent fed. We measure our productivity by its activity. We mistake utilization for impact.

Here's the sobering part: this anxiety is everywhere. A recent EY survey found that 54% of workers feel like they're falling behind their peers in AI use. Among non-managers, it's 61%. We're not imagining this pressure. We're all feeling it.

The Paradox

So here's the punchline, and it's a strange one:

The technology that gives us massive leverage somehow makes us feel more behind.

We've seen versions of this before. Email was supposed to save time; instead, it created an infinite inbox. Smartphones were supposed to free us from our desks; instead, they chained us to notifications. But this time feels different. Those were linear increases in connectivity. This is an exponential increase in capability.

The gap between "what you could be doing" and "what you are doing" is widening faster than you can close it. Every month, the theoretical ceiling rises. And if you measure yourself against that ceiling, you'll always come up short.

That's the leverage trap: the more power you have, the more you feel you're wasting.

What I'm Trying Instead

I don't have this figured out. But here's what I'm experimenting with:

Reframe the agent's job. Its purpose isn't to stay busy—it's to multiply my output. Those are different things. A well-directed 30-minute task beats eight hours of unfocused delegation.

Protect the human work. Judgment, customers, taste… these aren't the leftovers after you've delegated everything else. They're the critical path. The bottleneck worth protecting. The quality of your direction matters far more than the quantity of tasks you spawn.

Resist the utilization mindset. The agent being idle isn't a failure. Sometimes the best move is thinking before you type.

Having all the answers was never the end game. Knowing what to build, and why—that's the bet I'm placing.

The machine can stay busy. I'm trying to stay focused.
